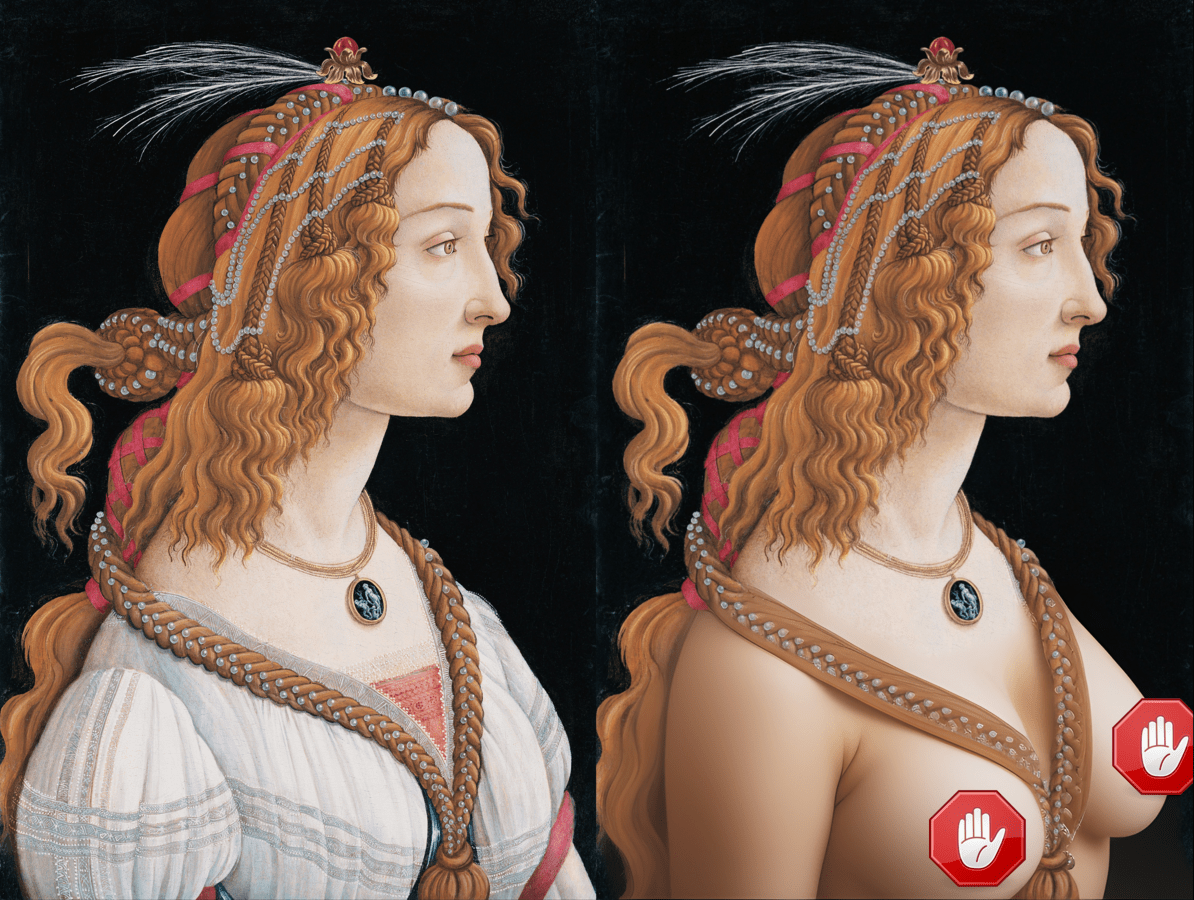

Online image-based sexual abuse (IBSA), colloquially known as “revenge porn,” has been an issue since the rise of the internet. IBSA includes the creation or distribution of sexually explicit images or videos without the consent of the subject. One popular method of IBSA distribution is posting these explicit materials online, often on pornographic websites. Once these materials are posted on the internet, it is very difficult to take them down. The rise of AI technology has drastically changed the way perpetrators produce and distribute IBSA. With AI, any image, sexual or not, can be turned into sexually explicit material. Victims of AI IBSA have included celebrities, politicians, children, and regular people.

AI IBSA is made possible by the growing sophistication and accessibility of AI technology. Creators of AI IBSA can use “nudify” websites to remove clothing from images, with some nudify websites working exclusively on images depicting female bodies. Another method of creating AI IBSA is through face swap AI technology. Face swapping consists of digitally placing a person’s face on the body of someone engaged in sexual activity. AI face swapping technology requires about 250 images of a victim’s face to create these generated images. While 250 may sound like a high number, creators of AI IBSA can acquire these images by isolating individual frames from a seconds-long video. Previously, AI technology was only available to those with specific technical knowledge, but it is quickly becoming accessible to the general public. Today, AI pornography makes up about 96% of deepfake videos posted online. AI pornography creators advertise their services on online platforms and accept major credit cards like Visa and Mastercard.

The DEFIANCE Act of 2024

Laws in the United States have not caught up to the growing issue of AI IBSA. The majority of states have some type of law against revenge porn, but very few of these directly address AI-generated IBSA. To date, there is no federal law that addresses AI IBSA, either civil or criminal. The federal Violence Against Women Reauthorization Act of 2022 provided a civil cause of action for IBSA through 15 U.S.C. § 6851, but the law does not specify whether AI IBSA is also covered. There have been many attempts to create a law directly addressing AI IBSA, such as the proposed DEEPFAKES Accountability Act of 2023, but none have gotten as far in the legislative process as the DEFIANCE Act of 2024.

The DEFIANCE Act of 2024 (“the Act”) was introduced in January 2024 by Senator Durbin of Illinois and passed the Senate in July 2024. It is currently awaiting action in the House of Representatives. If signed into law, the Act would amend 15 U.S.C. § 6851 to include civil remedies for AI IBSA. It would also expand the relief available to victims of both AI IBSA and non-AI IBSA to include punitive damages and orders to delete and destroy the offending images and videos.

The Act is a step in the right direction, but it falls short of adequately addressing the threat of AI IBSA. First, the Act would only provide a civil cause of action for victims of AI IBSA. In a civil case, the victim of AI IBSA would have to initiate a lawsuit, which may be difficult for multiple reasons. Victims may find it difficult to navigate the legal process, especially if they cannot afford to take legal action. Victims would also need to identify the original creator of the AI IBSA. Because the perpetrator can be anyone with access to the internet and does not need to have met the victim, it is difficult to track them down. Further, civil relief is limited compared to criminal penalties. The Act provides an opportunity for punitive damages, temporary restraining orders, preliminary injunctions, and permanent injunctions. However, perpetrators of AI IBSA cannot be imprisoned if a victim wins their case under the Act, because imprisonment is available only in criminal cases.

Second, the Act only applies after AI IBSA is created and distributed. Most of the damage is done as soon as the AI IBSA is distributed, and there is very little victims can do to repair it. Victims often suffer from stress, depression, paranoia, and suicidal thoughts as a result of the AI IBSA. Many victims also report a constant fear that they could be targeted by AI IBSA again. The Act does give courts the power to order the creator to delete, destroy, or cease disclosure of the AI IBSA. However, this remedy is of limited effect. The nature of the internet means that anyone could have a copy of images posted online, so once the AI IBSA is posted, it is difficult for the perpetrator or the victim to completely control its distribution.

Alternative Solutions

While federal criminalization may be the best solution, the history of legislative efforts to criminalize AI IBSA leaves little hope that criminalization is possible in the near future. However, there are alternatives that may fill in the gaps left by the DEFIANCE Act and similar legislation. One such alternative is going after the technology and websites that allow the creation of AI IBSA in the first place. For example, the city of San Francisco announced a lawsuit to shut down two companies that operate AI IBSA generating websites. Other regulations of these technologies include requiring websites to keep identifying information of their users or requiring watermarks on all generated images. These measures would ensure that AI IBSA could be easily tracked to the source, providing an easier path to civil litigation and deterring users from posting AI IBSA. However, websites that voluntarily implement these safeguards have already seen users circumvent them.

Perhaps the most effective way to prevent AI IBSA, then, would be to regulate the websites that make sharing and viewing AI IBSA possible. The vast majority of AI IBSA is hosted on a few specific AI pornography websites, which are home to 94% of deepfake pornography on the internet. As such, regulating or shutting these websites down could drastically reduce the amount of AI IBSA that remains on the internet. Going after these websites would also be easier than tracking down individuals who post and share AI IBSA. Current efforts to hold pornography websites accountable for profiting from IBSA, including sex trafficking and child sexual abuse, could serve as a model for action against AI IBSA. However, sex trafficking and child sexual abuse are already criminalized, while AI IBSA is not, so holding websites accountable for AI IBSA would be more complicated. There is also the issue of free speech. Obscene material is not protected by the First Amendment, but regulators would need to show that AI IBSA lacks serious literary, artistic, political, or scientific value, or prove that it fits into another exception to First Amendment protections.

The DEFIANCE Act is not enough to fully address the issue of AI IBSA. While the Act provides expanded civil remedies for victims, it falls flat in other important areas. The Act does not hold creators and distributors of AI IBSA criminally responsible. The Act only provides solutions after AI IBSA has already caused damage to victims, and the remedies available through the Act are not enough to remove AI IBSA from the internet. Federal criminalization of AI IBSA may be the most effective way to prevent AI IBSA, but this is likely not available in the near future. Instead, local, state, and federal governments can go after the websites and technology that make creating and distributing AI IBSA possible. AI technology is new, but the dangers of sexual abuse and violence have been recognized for a long time. If nothing is done to stop AI IBSA now, there is no telling what the future holds for AI image-based sexual abuse.

Suggested Citation: Johanna Hussain, AI IBSA: The Sexual Violence of the Future and Whether the DEFIANCE Act Can Stop It, Cornell J.L. & Pub. Pol’y, The Issue Spotter, (Nov. 15, 2024), https://jlpp.org/ai-ibsa-the-sexual-violence-of-the-future-and-whether-the-defiance-act-can-stop-it/.